Many companies rely on a file server in their daily work. It is often one of the least efficient forms of storage because, alongside the data that is actually needed, it tends to accumulate a large amount of “unnecessary information”: duplicate files, old backups, and so on. The presence of such files depends not on the server itself but on how the storage structure is organized. For example, a database very often stores file templates that differ from one another by only a few bits. As a result, the volume of stored data keeps growing, and so does the need for additional devices to hold backups. Data deduplication addresses this problem: it eliminates redundant copies, reduces the storage space required, and helps avoid the use of additional devices.

Data deduplication is a method of removing duplicated documents and records, which lowers the overall cost of holding the data. You can use this technique to get more out of any database system.

Data deduplication has several levels of execution:

- bytes;

- files;

- blocks.

Each level has its own strengths and weaknesses. Let’s look at them in more detail.

Table Of Contents

- 1 Data Deduplication Methods

- 1.1 Block Level

- 1.2 File Level

- 1.3 Byte Level

- 2 Deduplication During Backup

- 2.1 Client-server

- 2.2 Deduplication on the server

- 2.3 Deduplication on the client

- 3 Advantages and Disadvantages

- 4 Scope of Application

Data Deduplication Methods

Block Level

Block-level deduplication is considered the most popular approach. The data (a file) is split into parts and analyzed, and only one copy of each unique block is kept. A block, in this case, is a logical unit of data with a characteristic size, which may vary. During block-level deduplication, every block is processed with a hash function (for example, SHA-1 or MD5).

The hash algorithm produces a signature (identifier) for each unique block of data, and this signature is stored in the deduplication database. So, if a file changes over time, only its modified blocks are written to storage rather than the whole file.
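The idea can be illustrated with a minimal sketch. The `BlockStore` class, the 4-byte block size, and the in-memory dictionary standing in for the deduplication database are all assumptions made for this example; SHA-1 is used only because it is one of the hash functions named above.

```python
import hashlib

BLOCK_SIZE = 4  # deliberately tiny so the example is easy to follow (assumption)


class BlockStore:
    """Toy deduplicating store: each unique block is kept once, keyed by its hash."""

    def __init__(self):
        # signature -> block bytes; stands in for the deduplication database
        self.blocks = {}

    def put(self, data: bytes) -> list[str]:
        """Store data and return its recipe: the ordered list of block signatures."""
        recipe = []
        for i in range(0, len(data), BLOCK_SIZE):
            block = data[i:i + BLOCK_SIZE]
            signature = hashlib.sha1(block).hexdigest()
            self.blocks.setdefault(signature, block)  # only new blocks take space
            recipe.append(signature)
        return recipe

    def get(self, recipe: list[str]) -> bytes:
        """Rebuild the original data from its recipe."""
        return b"".join(self.blocks[signature] for signature in recipe)


store = BlockStore()
recipe_v1 = store.put(b"AAAABBBBCCCCDDDD")
blocks_after_v1 = len(store.blocks)
recipe_v2 = store.put(b"AAAABBBBXXXXDDDD")  # only the third block differs
blocks_after_v2 = len(store.blocks)
```

Saving the second version adds a single new block to the store, because the three unchanged blocks already have signatures in the dictionary.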

There are two types of block deduplication: with variable and with constant block length. With variable block length, a file is split into blocks that may each have a different size.

The variable-length option reduces the amount of stored data more effectively than deduplication with a constant block length.

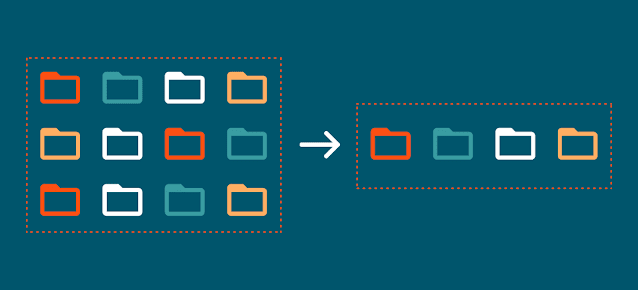
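One way to see why variable-length blocks can be more effective is to insert a single byte at the start of a file. With a constant block length, every later block shifts and no longer matches. If block boundaries are chosen from the content itself, they shift together with the data. The sketch below is an illustration under assumptions, not a description of any particular product: the window size, the bit mask, and the function names are invented for the example, and the window is rehashed at every position for simplicity, where production systems typically use a rolling hash and enforce minimum and maximum block sizes.

```python
import hashlib
import random

WINDOW = 8    # bytes inspected when deciding whether to cut (assumed value)
MASK = 0x3F   # cut when the low 6 bits are zero: roughly 64-byte blocks on average


def variable_chunks(data: bytes) -> list[bytes]:
    """Split data wherever a hash of the last WINDOW bytes matches a bit pattern."""
    chunks, start = [], 0
    for end in range(WINDOW, len(data) + 1):
        window = data[end - WINDOW:end]
        fingerprint = int.from_bytes(hashlib.sha1(window).digest()[:4], "big")
        if fingerprint & MASK == 0:
            chunks.append(data[start:end])
            start = end
    if start < len(data):
        chunks.append(data[start:])  # trailing block without a boundary match
    return chunks


def fixed_chunks(data: bytes, size: int = 64) -> list[bytes]:
    """Split data into blocks of constant length."""
    return [data[i:i + size] for i in range(0, len(data), size)]


rng = random.Random(0)
original = bytes(rng.getrandbits(8) for _ in range(4000))
modified = b"!" + original  # one byte inserted at the very start

fixed_shared = set(fixed_chunks(original)) & set(fixed_chunks(modified))
variable_shared = set(variable_chunks(original)) & set(variable_chunks(modified))
print(len(fixed_shared), "constant-length blocks reused")
print(len(variable_shared), "variable-length blocks reused")
```

In this sketch every variable-length block after the first one is reused unchanged, since each boundary decision depends only on the bytes immediately before it.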

File Level

This method of deduplication compares a new file with the files that are already saved. If the file is unique, it is saved. If it is not new, only a link (a pointer to the existing file) is saved.

That is, with this type of deduplication only one version of the file is recorded, and every later copy receives a pointer to the original. The main advantage of this method is that it is simple to implement and does not seriously degrade performance.
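A minimal sketch of this behaviour follows. The article does not say how files are compared. Here it is assumed that a hash of the whole file serves as its identity, and the `FileStore` class and its method names are hypothetical.

```python
import hashlib


class FileStore:
    """Toy file-level deduplication: identical content is stored only once."""

    def __init__(self):
        self.contents = {}  # digest -> file bytes, stored once
        self.index = {}     # file name -> digest (the "pointer")

    def save(self, name: str, data: bytes) -> bool:
        """Return True if new content was stored, False if only a pointer was added."""
        digest = hashlib.sha1(data).hexdigest()
        is_new = digest not in self.contents
        if is_new:
            self.contents[digest] = data
        self.index[name] = digest
        return is_new

    def load(self, name: str) -> bytes:
        """Follow the pointer for a file name back to the stored content."""
        return self.contents[self.index[name]]


files = FileStore()
stored_first = files.save("report.docx", b"quarterly numbers")
stored_copy = files.save("report (copy).docx", b"quarterly numbers")
```

The second call stores nothing new: both names point at the same content. The limitation is visible too: changing a single byte of a file produces a different digest, so the whole file is stored again.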

Byte Level

In principle, this method is similar to block-level deduplication, but instead of blocks it compares old and new files byte by byte. It is the only method that can guarantee the maximum elimination of duplicates.

However, deduplication at the byte level has a significant disadvantage: it places much higher demands on hardware, so the machine on which the process runs must be extremely powerful.
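As a rough illustration of byte-by-byte comparison, the sketch below describes a new version of a file as copies from the old version plus the literal bytes that are new. It uses `difflib.SequenceMatcher` from the Python standard library as a stand-in for a real byte-level engine; the `make_delta` and `apply_delta` helpers are hypothetical names. The matcher's worst-case running time is quadratic, which hints at why this level is so demanding on hardware.

```python
from difflib import SequenceMatcher


def make_delta(old: bytes, new: bytes) -> list[tuple]:
    """Describe `new` as copies from `old` plus literal bytes not found in `old`."""
    delta = []
    matcher = SequenceMatcher(None, old, new, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            delta.append(("copy", i1, i2))      # reuse bytes old[i1:i2]
        elif tag in ("replace", "insert"):
            delta.append(("data", new[j1:j2]))  # only these bytes are stored
        # "delete": bytes of `old` that the new version simply does not reference
    return delta


def apply_delta(old: bytes, delta: list[tuple]) -> bytes:
    """Rebuild the new version from the old version and the delta."""
    out = bytearray()
    for op in delta:
        if op[0] == "copy":
            out += old[op[1]:op[2]]
        else:
            out += op[1]
    return bytes(out)


old_version = b"The quick brown fox jumps over the lazy dog"
new_version = b"The quick brown cat jumps over the lazy dog"
delta = make_delta(old_version, new_version)
literal_bytes = sum(len(op[1]) for op in delta if op[0] == "data")
```

Only the bytes counted in `literal_bytes` need to be stored for the new version; everything else is a reference into the old one.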

Deduplication During Backup

Duplicates are often removed while a backup copy is being saved. The process can differ in where it is executed: at the source of the information (the client), at the storage side (the server), or both.

Client-server

This is a combined option in which deduplication can be performed both on the client and on the server. Before sending information to the server, special software tries to determine which data has already been recorded. As a rule, block-level deduplication is used. A hash is calculated for each block of information, and the list of hash keys is sent to the server. The server compares these keys with the ones it already holds, after which only the blocks it is missing are transferred from the client. This reduces the overall load on the network, since only unique blocks are transferred.
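The exchange described above can be sketched as a small in-process simulation. No real network protocol is implied: the `Server` class, its `missing` and `upload` methods, and the 4-byte block size are assumptions made for the example.

```python
import hashlib

BLOCK_SIZE = 4  # tiny block size for readability (assumption)


def split(data: bytes) -> list[bytes]:
    return [data[i:i + BLOCK_SIZE] for i in range(0, len(data), BLOCK_SIZE)]


def signature(block: bytes) -> str:
    return hashlib.sha1(block).hexdigest()


class Server:
    """Holds the unique blocks received so far, keyed by signature."""

    def __init__(self):
        self.blocks = {}

    def missing(self, signatures: list[str]) -> set[str]:
        """Step 2: compare the client's hash keys with the stored ones."""
        return {s for s in signatures if s not in self.blocks}

    def upload(self, blocks: dict[str, bytes]) -> None:
        """Step 3: accept only the blocks that were requested."""
        self.blocks.update(blocks)


def backup(server: Server, data: bytes) -> int:
    """Run one backup and return the number of payload bytes actually sent."""
    blocks = split(data)
    signatures = [signature(b) for b in blocks]  # step 1: hash on the client
    wanted = server.missing(signatures)
    payload = {s: b for s, b in zip(signatures, blocks) if s in wanted}
    server.upload(payload)
    return sum(len(b) for b in payload.values())


server = Server()
first_run = backup(server, b"AAAABBBBCCCC")
second_run = backup(server, b"AAAABBBBDDDD")  # only the last block is new
```

The first run sends all 12 bytes, while the second sends only the 4 bytes of the one block the server has not seen. The list of hash keys still crosses the network each time, but it is much smaller than the data itself.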

Deduplication on the server

Now let’s look at deduplication on the server. This option is used when information is transmitted to the server without any prior processing. The check for duplicates can be done in software or in hardware. Software deduplication relies on special software that launches the required processes. With this approach, it is important to take the load on the system into account, as it may be too high. Hardware deduplication relies on dedicated solutions that combine deduplication and backup procedures.

Deduplication on the client

When creating a backup, deduplication can also be performed on the source itself. This method uses only the client’s own computing power. After the data has been checked, it is sent to the server. Data deduplication on the client requires special software. The disadvantage of this solution is increased RAM usage on the client.

Advantages and Disadvantages

The positive aspects of deduplication, as a process, include the following points:

- High efficiency. The data deduplication process can reduce the need for storage capacity by a factor of 20-30.

- Cost-effectiveness for applications with low network bandwidth, since only unique data is transferred.

- The capacity to create backups more often and to keep data backups for longer.

The weaknesses of deduplication include:

- The probability of a data clash (a hash collision) if two different blocks produce the same hash key. In this case, database corruption may happen, which will lead to failures during restoration from a backup. The bigger the data system, the higher the risk of such a conflict. The risk can be reduced by increasing the size of the hash.
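To get a feel for how the risk depends on the amount of data and on the hash size, the standard birthday approximation can be used. The sketch below assumes that hash values are uniformly distributed, which is an idealization: it describes accidental collisions only and says nothing about collisions constructed on purpose. The function name is hypothetical.

```python
import math


def collision_probability(num_blocks: int, hash_bits: int) -> float:
    """Birthday approximation: chance that any two of num_blocks share a hash."""
    exponent = -num_blocks * (num_blocks - 1) / (2 * 2 ** hash_bits)
    return -math.expm1(exponent)  # 1 - e^exponent, accurate for tiny values


blocks = 2 ** 40  # about a trillion unique blocks (assumed workload)
p_128 = collision_probability(blocks, 128)  # 128-bit hash, the size of MD5
p_160 = collision_probability(blocks, 160)  # 160-bit hash, the size of SHA-1
```

Both results are extremely small, but the probability grows roughly with the square of the number of blocks, while each additional bit of hash length halves it.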

Scope of Application

Many people reasonably ask why data deduplication is needed and whether it is possible to do without it. As practice shows, sooner or later you still have to resort to it. Over time, copies and duplicates of files can take up 2-3 times more space than the original files, so the unnecessary data has to be deleted. Deduplication is used especially often by developers in the backup market.

In addition, the technology is often used on production servers. In this case, the procedure can be performed by operating system tools or by additional software.
